Consumer Reports finds unclear, questionable privacy practices and policies among popular mental health apps

Mental Health Apps Aren’t All As Private As You May Think (Consumer Reports):

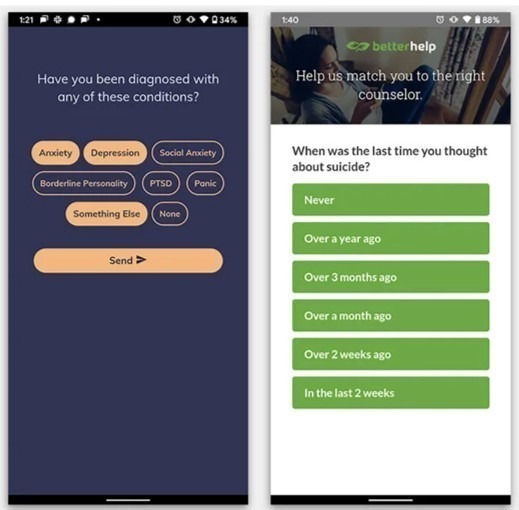

Type “mental health” or a condition such as anxiety or depression into an app store search bar, and you can end up scrolling through endless screens of options. As a recent Consumer Reports investigation has found, these apps take widely varied approaches to helping people handle psychological challenges—and they are just as varied in how they handle the privacy of their users.

… Researchers in Consumer Reports’ Digital Lab evaluated seven of the most popular options, representing a range of approaches, to gain more insight into what happens to your personal information when you start using a mental health app.

The apps we chose were 7 Cups, BetterHelp, MindDoc (formerly known as Moodpath), Sanity & Self, Talkspace, Wysa, and Youper. We left out popular alternatives such as Headspace, which is pitched as a meditation app, although the lines between many apps people turn to for support can be blurry … In general, these mental health services acted like many other apps you might download. For instance, we spotted apps sharing unique IDs associated with individual smartphones that tech companies often use to track what people do across lots of apps. The information can be combined with other data for targeted advertising. Many apps do that, but should mental health apps act the same way? At a minimum, Consumer Reports’ privacy experts think, users should be given a clearer explanation of what’s going on.

About the Report:

Peace of Mind: Evaluating the privacy practices of mental health apps (25 pages; opens PDF).

Conclusion: Mental health apps show many of the same patterns we see elsewhere in data-collecting apps. However, the sensitivity of the data they collect means the privacy practices and policies are even more important, especially during a pandemic when people are relying on these services in greater numbers for the first time. Our evaluation shows that there are multiple ways to evaluate how thoughtfully mental health apps handle user data collection, management, and sharing with third parties.

We call for all apps to improve on the recommendations highlighted in Section 5: adhere to platform guidelines, institute clear explanations of de-identification of data used for research, increase privacy awareness in the main user experience, and be transparent about the service providers that receive user data. Some apps may openly name in their privacy policies the third-party companies they share data with, while others may not mention any. Some apps may create clear ways to delete one’s data through the mobile app, while others may limit this user right to California residents based on CCPA. Some apps over-collect data, such as geolocation, that is not necessary for the app to function. Consumer Reports recognizes there are many nuanced design and data governance decisions that factor into offering a high-quality and private-by-design service. By comparatively evaluating popular apps, we can clarify how companies can continue to raise the standard in this emerging category, ensure that consumers consent to the collection and sharing of sensitive mental health data, and ensure that consumers can trust these services to be good stewards of their data.

News in context:

- A call to action: We need the right incentives to guide ethical innovation in neurotech and healthcare

- The National Academy of Medicine (NAM) shares discussion paper to help empower 8 billion minds

- Researchers propose four “neurorights” to harness neurotechnology for good: cognitive liberty, mental privacy, mental integrity, and psychological continuity

- Neurotechnology can improve our lives…if we first address these Privacy and Informed Consent issues